Before learning Docker, we should first clarify why we need it and look at some old-school development processes.

The bad old days

Applications run businesses. If applications break, businesses suffer and sometimes go away. These statements get truer every day!

Most applications run on servers. In the past, we could only run one application per server. The open-systems world of Windows and Linux just didn’t have the technologies to safely and securely run multiple applications on the same server.

So, the story usually went something like this... Every time the business needed a new application, IT would go out and buy a new server. And most of the time nobody knew the performance requirements of the new application! This meant IT had to make guesses when choosing the model and size of servers to buy.

As a result, IT did the only thing it could do: it bought big, fast servers with lots of resiliency. Under-powered servers might be unable to execute transactions, which could mean lost customers and lost revenue. So IT usually bought bigger servers than were actually needed. This resulted in huge numbers of servers running at as little as 5-10% of their potential capacity. A tragic waste of company capital and resources!

Hello VMware!

VMware, Inc. gave the world a gift - the virtual machine (VM). We finally had a technology that would let us safely and securely run multiple business applications on a single server.

All of a sudden, we could squeeze massive amounts of value out of existing corporate assets, such as servers, resulting in a lot more bang for the company’s buck.

But... and there’s always a but! As great as VMs are, they’re not perfect!

The fact that every VM requires its own dedicated OS is a major flaw. Every OS consumes CPU, RAM and storage that could otherwise be used to power more applications. Every OS needs patching and monitoring. VMs are slow to boot and portability isn’t great - migrating and moving VM workloads between hypervisors and cloud platforms is harder than it needs to be.

Hello, Containers!

For a long time, big web-scale players like Google have been using container technologies to address these shortcomings of the VM model.

In the container model, the container is roughly analogous to the VM. The major difference, though, is that a container does not require its own full-blown OS. In fact, all containers on a single host share the host’s OS kernel. This frees up huge amounts of system resources such as CPU, RAM, and storage.

Containers are also fast to start and ultra-portable. Moving container workloads from your laptop to the cloud, and then to VMs or bare metal in your data center is a breeze.

A container is a sandboxed process running on a host machine, isolated from all the other processes running on that host.

Linux containers

Modern containers started in the Linux world and are the product of an immense amount of work from a wide variety of people over a long period of time. Just as one example, Google Inc. has contributed many container-related technologies to the Linux kernel. Without these and other contributions, we wouldn’t have modern containers today.

Some of the major technologies that enabled the massive growth of containers in recent years include kernel namespaces, control groups, and of course Docker. The modern container ecosystem is deeply indebted to the many individuals and organizations that laid the strong foundations that we currently build on!

Windows containers

Over the past few years, Microsoft Corp. has worked extremely hard to bring Docker and container technologies to the Windows platform.

In achieving this, Microsoft has worked closely with Docker, Inc.

The core Windows technologies required to implement containers are collectively referred to as Windows Containers. The user-space tooling to work with these Windows Containers is Docker, which makes the Docker experience on Windows almost the same as Docker on Linux. As a result, developers and sysadmins familiar with the Docker toolset from the Linux platform will feel at home using Windows containers.

Windows containers vs. Linux containers

It’s vital to understand that a running container uses the kernel of the host machine it is running on. This means that a container designed to run on a host with a Windows kernel will not run on a Linux host, and vice versa. At a high level, you can think of it like this: Windows containers require a Windows host, and Linux containers require a Linux host.

Namespaces

Namespaces have been part of the Linux kernel since about 2002, but real container support arrived only in 2013, when Linux 3.8 completed user namespaces.

Namespaces are a feature of the Linux kernel that partitions kernel resources such that one set of processes sees one set of resources while another set of processes sees a different set of resources.

A server typically runs many different services. Isolating each service and its associated processes from the others means a smaller blast radius for changes and a smaller footprint for security-related concerns.

The Linux kernel has several types of namespaces, including:

User namespace: has its own set of user IDs and group IDs for assignment to processes.

Process (PID) namespace: assigns processes a set of PIDs that is independent of the set of PIDs in other namespaces.

Network namespace: has an independent network stack, including its own network interfaces, routing tables, and firewall rules.

Mount namespace: has an independent list of mount points seen by the processes in the namespace. This means that you can mount and unmount filesystems in a mount namespace without affecting the host filesystem.

UNIX Time-Sharing (UTS) namespace: allows a single system to appear to have different host and domain names for different processes.
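You can inspect these namespaces yourself on a Linux machine. The kernel exposes each process’s namespace memberships as symbolic links under /proc/<pid>/ns, and two processes share a namespace when the corresponding links point at the same identifier (something like pid:[4026531836]). The Python sketch below assumes a Linux host with /proc mounted; list_namespaces and share_namespace are hypothetical helper names, not part of any library.

```python
import os


def list_namespaces(ns_dir: str = "/proc/self/ns") -> dict[str, str]:
    """Map each namespace type (e.g. "pid", "net", "uts") to its link target.

    On Linux, every entry in /proc/<pid>/ns is a symbolic link whose target
    looks like "pid:[4026531836]"; the number identifies the namespace.
    Returns an empty dict when the directory does not exist (e.g. not on Linux).
    """
    if not os.path.isdir(ns_dir):
        return {}
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        path = os.path.join(ns_dir, name)
        if os.path.islink(path):
            namespaces[name] = os.readlink(path)
    return namespaces


def share_namespace(pid_a: int, pid_b: int, kind: str) -> bool:
    """Return True if two processes are in the same namespace of the given kind."""
    link_a = os.readlink(f"/proc/{pid_a}/ns/{kind}")
    link_b = os.readlink(f"/proc/{pid_b}/ns/{kind}")
    return link_a == link_b


if __name__ == "__main__":
    # Print one line per namespace the current process belongs to.
    for kind, target in list_namespaces().items():
        print(f"{kind:20} {target}")
```

Running it on the host and again inside a container would typically show different identifiers for pid, net, mnt, and uts, which is exactly the isolation described above.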

cgroups

A control group (cgroup) is a Linux kernel feature that limits, accounts for, and isolates the resource usage (CPU, memory, disk I/O, network, and so on) of a collection of processes.

Cgroups provide the following features:

Resource limit: configure a cgroup to limit how much of a particular resource its processes can use.

Prioritization: control how much of a resource (such as CPU time) the processes in one cgroup get compared to processes in another cgroup.

Accounting: resource usage is monitored and reported at the cgroup level.

Control: change the state (frozen, stopped, restarted) of all processes in a group with a single command.
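To make resource limits and prioritization more concrete, here is a small Python sketch that interprets cgroup v2 data. It assumes cgroup v2 (the unified hierarchy) mounted at /sys/fs/cgroup, where cpu.max holds a "<quota> <period>" pair in microseconds (or "max" for no limit) and cpu.weight holds a relative weight. It also reads the group’s path from /proc/self/cgroup, whose cgroup v2 line has the form 0::<path>. The function names are illustrative only.

```python
import os
from typing import Optional


def own_cgroup_v2_path(proc_self_cgroup: str) -> Optional[str]:
    """Return the cgroup v2 path from the contents of /proc/self/cgroup.

    Each line has the form "hierarchy-ID:controller-list:cgroup-path";
    the cgroup v2 (unified) entry is the one with ID 0 and no controllers.
    """
    for line in proc_self_cgroup.splitlines():
        parts = line.split(":", 2)
        if len(parts) != 3:
            continue  # skip blank or malformed lines
        hierarchy_id, controllers, path = parts
        if hierarchy_id == "0" and controllers == "":
            return path
    return None


def parse_cpu_max(content: str) -> Optional[float]:
    """Convert a cgroup v2 cpu.max value into a number of CPUs.

    "150000 100000" means the group may run for 150000 us in every 100000 us
    period, i.e. 1.5 CPUs; "max 100000" means no limit and returns None.
    """
    quota, period = content.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def cpu_shares(weights: dict[str, int]) -> dict[str, float]:
    """Split CPU time between busy sibling cgroups in proportion to cpu.weight."""
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}


if __name__ == "__main__":
    # Only meaningful on a Linux host using cgroup v2 mounted at /sys/fs/cgroup.
    if os.path.exists("/proc/self/cgroup"):
        with open("/proc/self/cgroup") as f:
            path = own_cgroup_v2_path(f.read())
        cpu_max_file = f"/sys/fs/cgroup{path}/cpu.max" if path else ""
        if cpu_max_file and os.path.exists(cpu_max_file):
            with open(cpu_max_file) as f:
                limit = parse_cpu_max(f.read())
            print("CPU limit:", "unlimited" if limit is None else f"{limit} CPUs")
    # Two busy groups with weights 300 and 100 get 75% and 25% of the CPU.
    print(cpu_shares({"web": 300, "batch": 100}))
```

For reference, Docker documents its --cpus=1.5 flag as equivalent to a 100000 microsecond period with a 150000 microsecond quota, which is the same 1.5-CPU limit parse_cpu_max decodes. The weight calculation is a simplification: the kernel divides CPU time by weight only among sibling groups that are actually competing for it.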

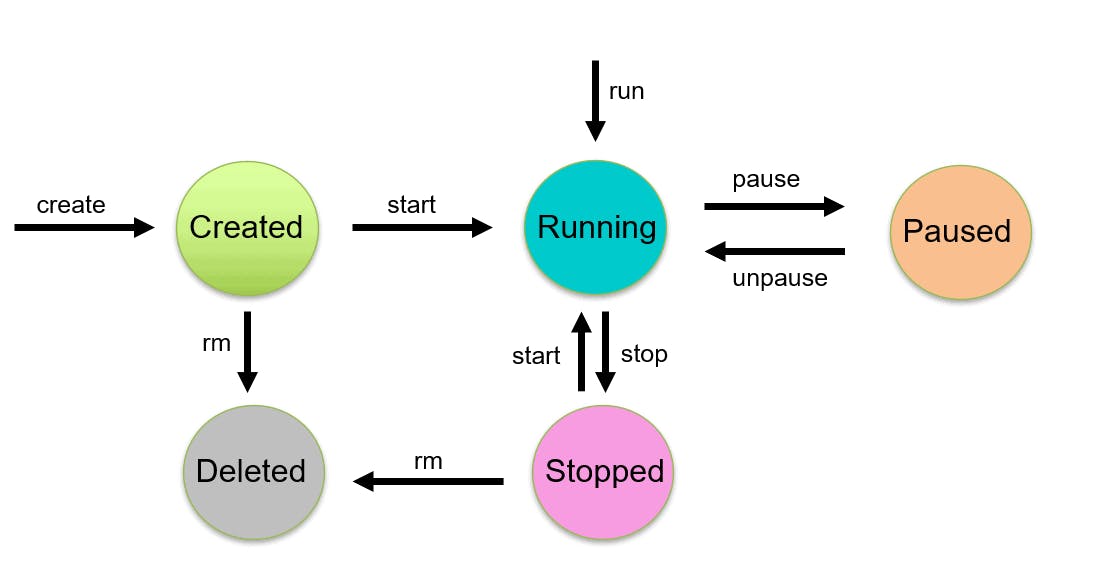

Lifecycle of a container

A container goes through five phases:

Created

Running

Paused

Stopped

Deleted
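One way to picture these five phases is as a small state machine. The Python sketch below is a simplified model, not Docker’s actual implementation: the transition table is an assumption built from everyday docker commands (start, pause, unpause, stop, restart, rm), Container is a hypothetical toy class rather than part of any Docker SDK, and forced removal (docker rm -f) is left out. Note that Docker itself reports a stopped container with the status "exited".

```python
from enum import Enum


class State(Enum):
    CREATED = "created"
    RUNNING = "running"
    PAUSED = "paused"
    STOPPED = "stopped"
    DELETED = "deleted"


# Simplified transition table: (current state, docker command) -> next state.
# Forced removal ("docker rm -f") and restart policies are deliberately left out.
TRANSITIONS = {
    (State.CREATED, "start"): State.RUNNING,
    (State.CREATED, "rm"): State.DELETED,
    (State.RUNNING, "pause"): State.PAUSED,
    (State.RUNNING, "stop"): State.STOPPED,
    (State.RUNNING, "restart"): State.RUNNING,
    (State.PAUSED, "unpause"): State.RUNNING,
    (State.STOPPED, "start"): State.RUNNING,
    (State.STOPPED, "restart"): State.RUNNING,
    (State.STOPPED, "rm"): State.DELETED,
}


class Container:
    """Toy model of a container that only allows the transitions above."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.state = State.CREATED  # "docker create" leaves a container here
        self.history = [self.state]

    def run(self, command: str) -> State:
        """Apply a docker command, rejecting transitions the model does not allow."""
        key = (self.state, command)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot '{command}' a container that is {self.state.value}")
        self.state = TRANSITIONS[key]
        self.history.append(self.state)
        return self.state


if __name__ == "__main__":
    c = Container("web")
    for cmd in ["start", "pause", "unpause", "stop", "rm"]:
        print(f"docker {cmd:8} -> {c.run(cmd).value}")
```

Encoding the phases as an explicit table makes invalid moves, such as pausing a container that was never started, fail loudly instead of silently.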

We will deep dive into each of these phases, with plenty of examples, in upcoming episodes.
